Bridging the gap in study habits: from memorization to retrieval

Abstract

I was a TA for Plant Physiology (PLB 111) at UC Davis for four years, running three discussion sections a week. During that time I identified specific gaps in student performance, particularly on applied problems involving math. I later had the opportunity to teach the class as instructor of record. Among other changes to the course, I implemented a gamified web-based tool to address these gaps. I called it the Midterm Flight Simulator 0.1 (MFS) because it was meant to give students practice taking the midterm, so that the real midterm would not be their first time “taking the midterm.” It was offered as a completely voluntary exercise, expressly worth zero points. In spite of this, on average almost 90% of the students used the MFS. The midterms had math questions comparable to those of the previous four years, which allowed a comparison across years. Compared to previous years, students’ performance improved and performance benchmarks improved substantially. The overall midterm distributions improved, especially on the math questions.

Introduction

There is ample evidence that retrieval practice is more valuable for learning than rote memorization.1

Quizzes and exams are not just methods of evaluating student performance; they are in themselves tools for instruction. Students may not agree, but the data say otherwise. In particular, quizzes and exams expressly exercise the mental faculty of retrieval, which is subtly distinct from memorization. We have all had the experience of trying unsuccessfully to remember a fact. Although we have difficulty retrieving the fact, upon hearing it we can confirm it (having been able to rule out wrong answers) and in so doing acknowledge that we knew it all along. This suggests that we had no problem memorizing the fact; it is the retrieval that is the challenging part. And it is retrieval that is necessary for applying knowledge in the real world. This retrieval can be interwoven with several other threads of retrieval: when one thread is pulled, they are all pulled together.

In my experience as a TA and instructor, student study habits consist predominantly of poring over slides (re-reading) and focus on rote memorization. This provides a false sense of confidence when the time comes for retrieval, as on an exam or in the real world. Flash cards and their equivalents (such as the digital version, Anki) are an improvement because they practice retrieval, but they tend to be limited to relatively simple material and usually offer little extra assistance. Practicing math problems can be frustrating, and students can quickly burn out. Students often study the solutions to specific math problems instead of trying the problems themselves first. Studying the solution to a math problem is akin to rote memorization, whereas practicing math problems is akin to retrieval practice. Learning how to solve mathematical word problems, however, sometimes requires a little more finesse in assisting students along the way. Internet platforms do not completely replace the value of personalized in-person tutoring, but they allow students to practice problems in their own time, for as long or as little as they want.

Background of Previous Study Strategies

In the four years that I TAed the class, students were expected to practice math problems using problem sets with separate answer guides. They were expected to do these problems at home and check their answers against the answer key. If they had trouble, they could go to office hours, attend discussion section, or email either the TA or the professor for help getting out of their mathematical impasse. During discussion section, I would go over several of these math problems with the students. But I realized that they needed more practice on their own and that time would be a constraint during discussion section: they needed to do it in their own time. If I could provide them a more comfortable environment, where they were free to take their time2 and make mistakes without anyone watching, I felt I might be able to help. If I could make it less tedious, it would be even better.

Overview of the Midterm Flight Simulator

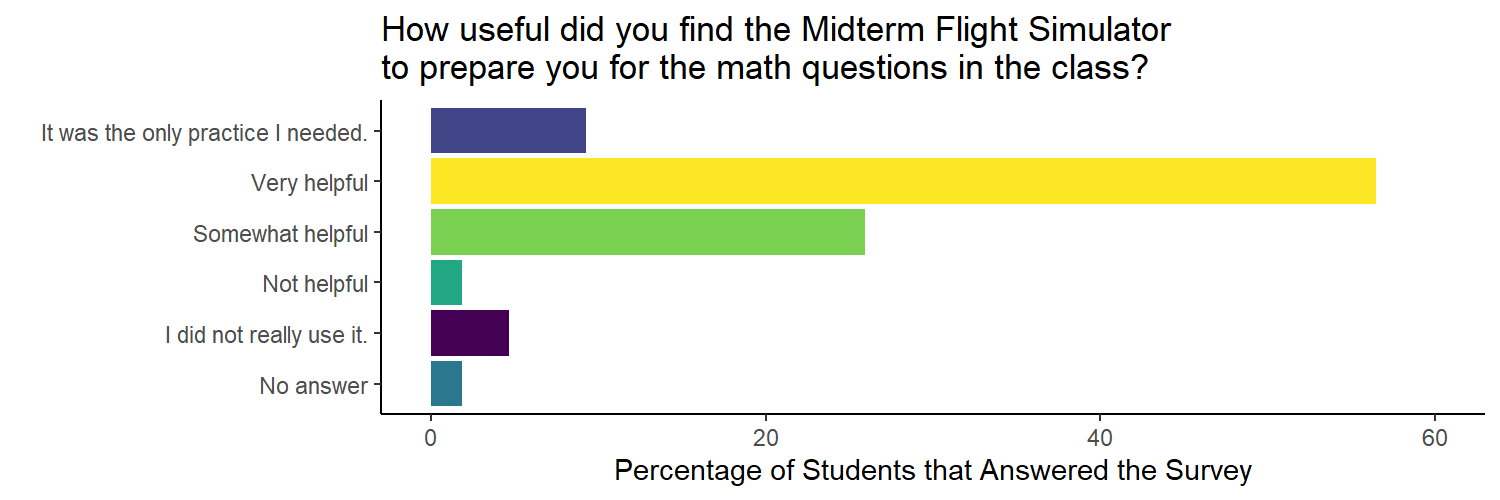

The Midterm Flight Simulator (MFS) is essentially a series of quizzes, glorified through gamification. It provides immediate feedback on correct answers and, after an incorrect answer, assistance (“Steering Assistance”) to help students try again and get on track to the right answer. It was originally designed to help students study mathematical problems in Plant Physiology at UC Davis, but the idea can be applied to several other disciplines, especially those with applied math problems.
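To make the feedback loop concrete, here is a minimal Python sketch of how a tool like this could check an answer and hand back a hint after a miss. It is not the actual MFS code. The names `Question`, `check_attempt`, and `example`, the numeric tolerance, and the escalating-hint rule are all assumptions made for illustration, and the sample question is a generic water potential problem rather than one taken from the course.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    prompt: str
    answer: float
    # Hypothetical "Steering Assistance" messages, from general to specific.
    hints: list[str] = field(default_factory=list)
    # Accepted absolute error; an assumed grading rule, not the MFS's.
    tolerance: float = 0.01


def check_attempt(question: Question, response: float, attempt: int) -> tuple[bool, str]:
    """Return (is_correct, feedback) for one attempt; attempts count from 1."""
    if abs(response - question.answer) <= question.tolerance:
        return True, "Correct!"
    if not question.hints:
        return False, "Not quite. Try again."
    # Escalate through the hints, repeating the last one once they run out.
    hint = question.hints[min(attempt, len(question.hints)) - 1]
    return False, f"Not quite. {hint}"


# Illustrative question only (water potential = solute + pressure potential).
example = Question(
    prompt="A cell has a solute potential of -0.7 MPa and a pressure "
           "potential of 0.4 MPa. What is its water potential in MPa?",
    answer=-0.3,
    hints=[
        "Water potential is the sum of solute and pressure potential.",
        "Add -0.7 MPa and 0.4 MPa, and keep track of the sign.",
    ],
)
```

A wrong first attempt returns the general hint, a wrong second attempt returns the more specific one, and a correct answer returns immediate confirmation. A time limit per question, as mentioned in the footnotes, could be layered on top of this loop.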

The MFS was offered to the students as a “free trial” of a service. It was explained, half-jokingly, that “MFS 0.1 was tailored to PLB 111: Plant Physiology by using a generative AI3 specialized in Plant Biology, trained on data from the last 4 years of PLB 111 at UC Davis.”

A disclaimer was given to indicate that the MFS was completely voluntary and worth zero points:

“These bite-sized midterms are worth zero points, but they may impact your grade because, if used properly, they may inhibit your forgetting.”

The MFS was offered in conjunction with the same question sets and answer keys that were provided to students in previous years. In prior years, these were also offered for zero points and were completely voluntary.

Results: Widespread Adoption and Measurable Improvements in Concrete Objectives


Student Reception

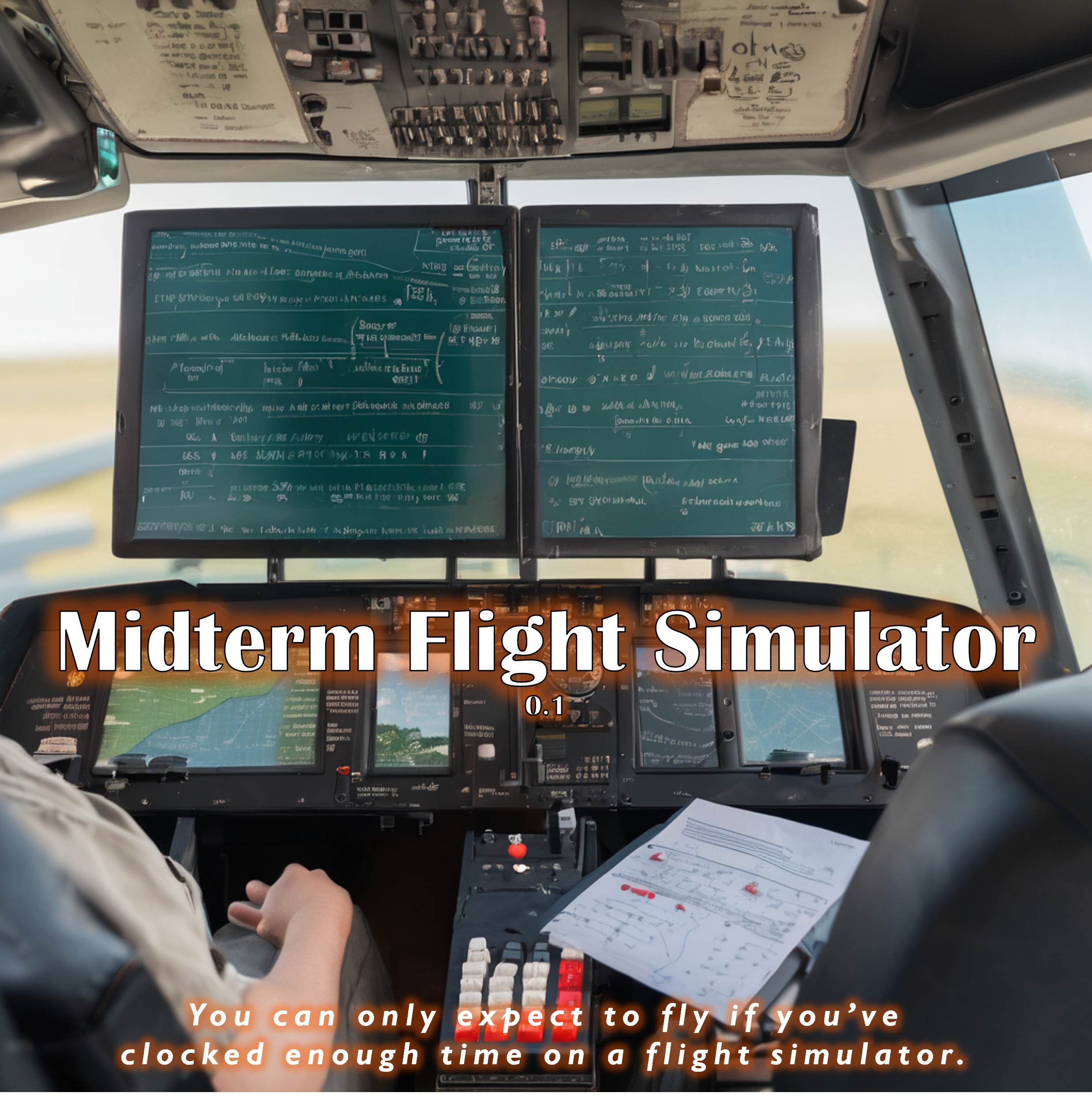

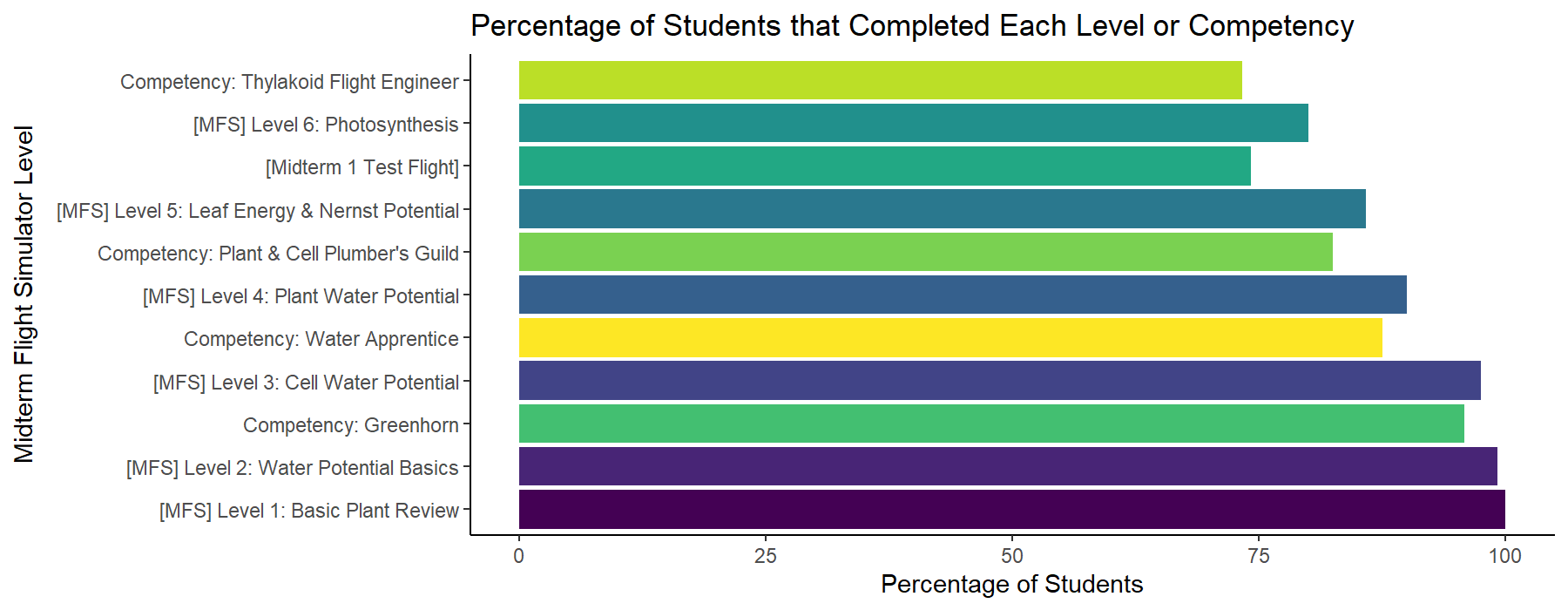

Although the MFS was worth zero points, the average completion rate across the different MFS levels was above 88%.

(From a survey completed by 108 of 120 students.)

Student Performance

Year after year, different midterm questions tested students’ ability to solve different kinds of problems, starting from different kinds of data presented in different ways. The questions were nonetheless very comparable in difficulty and scope.

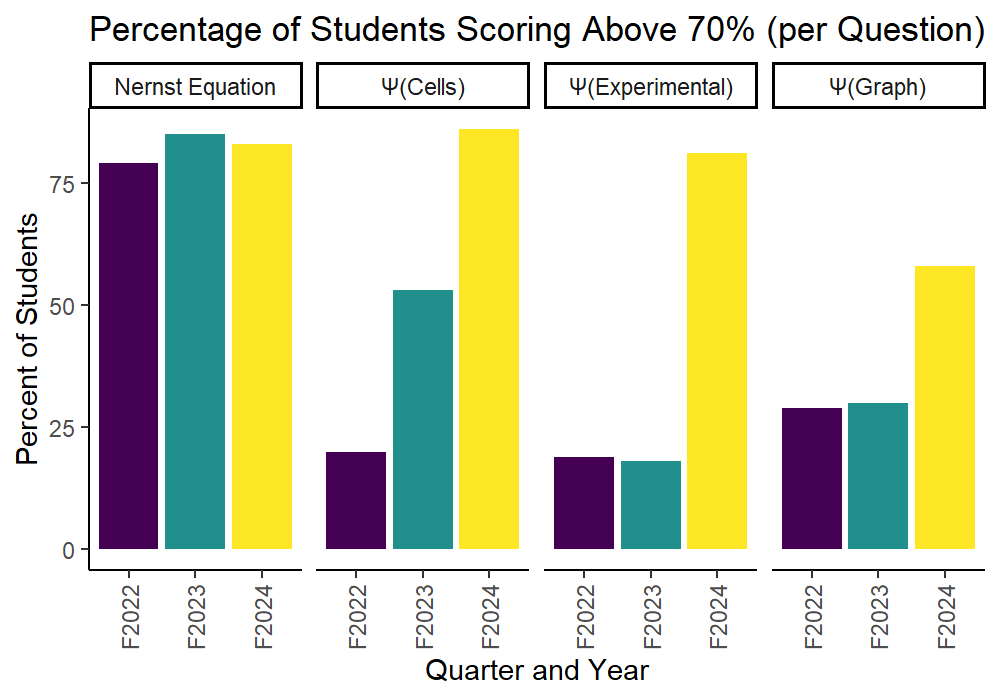

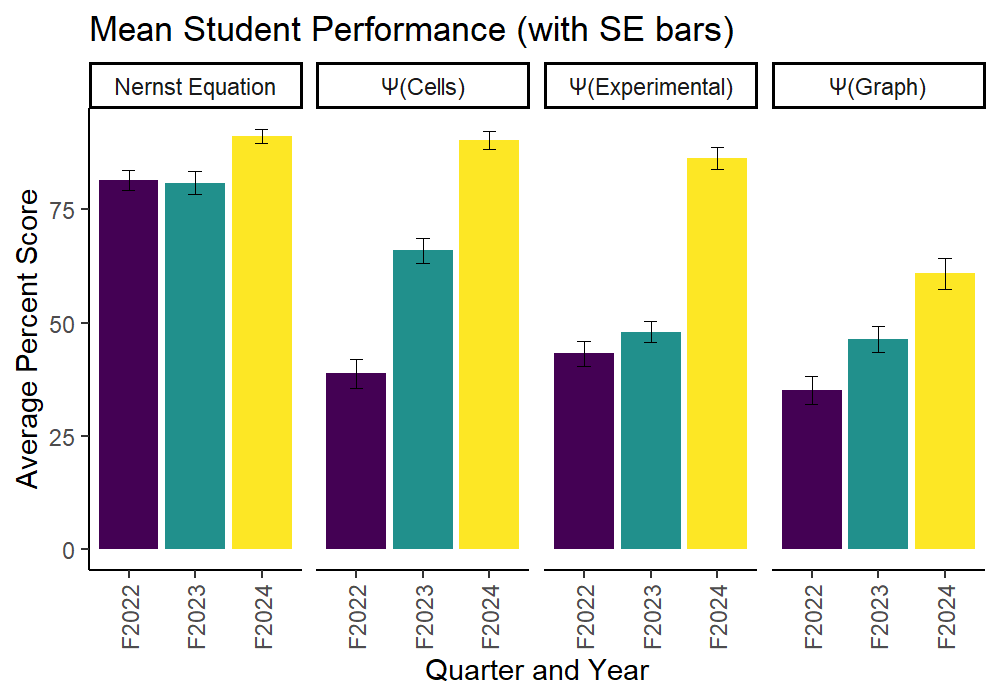

The percentage of students scoring above 70% substantially increased on most of the math questions under consideration.

The scores improved significantly compared to the previous two years, especially on questions about water potential in different contexts.
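For readers who want to compute the same kind of benchmark on their own classes, the share of students scoring above 70% on a question can be sketched as follows. The function name `share_above` is hypothetical, and the scores below are made-up placeholders used only to show the arithmetic; they are not data from PLB 111.

```python
def share_above(scores: list[float], threshold: float = 70.0) -> float:
    """Return the percentage of scores strictly above the threshold."""
    if not scores:
        raise ValueError("scores must not be empty")
    return 100.0 * sum(s > threshold for s in scores) / len(scores)


# Made-up placeholder scores (percent) for one question, NOT course data.
previous_year = [55.0, 62.0, 71.0, 80.0, 68.0]
mfs_year = [72.0, 65.0, 88.0, 91.0, 75.0]

# Change in the benchmark, in percentage points, between the two lists.
change = share_above(mfs_year) - share_above(previous_year)
```

Comparing the benchmark question by question, rather than on the whole exam, keeps the comparison focused on the math questions that were matched across years.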

Screenshots

Footnotes

1. Brown, Roediger III, & McDaniel, Make It Stick: The Science of Successful Learning.

2. They had more time for a single problem than they would in discussion section. But there were still time constraints on the MFS, as in many video games. The aim was to keep students from simply opening the MFS and leaving it running in their browser.

3. An asterisk here explained that “AI = Associate Instructor,” which was the name of my position as a graduate student instructor.